"ValueError: Data cardinality is ambiguous" in model ensemble with 2 different inputs

I am trying a simple model ensemble with 2 different input datasets and 1 output. I want to get predictions for one dataset and hope model will extract some useful features from the second one. I get an error:

ValueError: Data cardinality is ambiguous: x sizes: 502, 1002 y sizes: 502 Make sure all arrays contain the same number of samples.

But I want it to fit for smaller dataset.

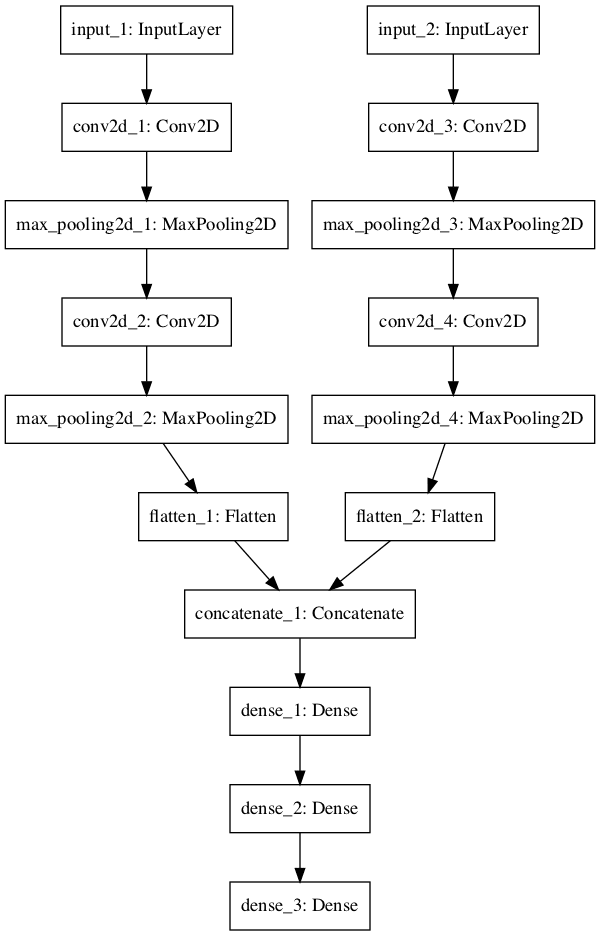

Architecture is like this:

Code:

import pandas as pd

from keras.models import Model

from keras.layers import Input, Dense, Flatten

from keras.layers.merge import concatenate

from keras.utils import plot_model

train_1d_X = pd.read_csv('train1d_x.csv').values

train_12h_X = pd.read_csv('train12h_x.csv').values

train_1d_y = pd.read_csv('train1d_y.csv').values

train_12h_y = pd.read_csv('train12h_y.csv').values

#model 1d

input_1d = Input(shape=train_1d_X.shape)

dense_1d_1 = Dense(16, activation='relu')(input_1d)

#model 12h

input_12h = Input(shape=train_12h_X.shape)

dense_12h_1 = Dense(16, activation='relu')(input_12h)

#merge

merge = concatenate([dense_1d_1, dense_12h_1], axis=1)

hidden1 = Dense(32, activation='relu')(merge)

output = Dense(1, activation='linear')(hidden1)

model = Model(inputs=[input_1d, input_12h], outputs=[output])

model.compile(loss='mse', optimizer='adam')

model.fit([train_1d_X, train_12h_X], train_1d_y, epochs=10, verbose=2)

Topic ensemble keras tensorflow regression deep-learning

Category Data Science